Updated: February, 2017

Over the years I’ve spent a fair amount of time thinking about radiometric dating techniques as compared to other means of estimating elapsed time. But why is this topic so interesting and important for me? Well, for many former and even current Seventh-day Adventists, radiometric dating of the rocks of the Earth presents a serious problem when compared to the apparent claims of the Bible regarding a literal 7-day creation week – and many have voiced such concerns over the years (Link). After all, according to nearly all of the best and brightest scientists on the planet today, life has existed and evolved over many hundreds of millions of years.

But how can they be so sure? Their confidence is primarily based on the fact that radioactive elements decay or change into other elements at a constant and predictable clock-like rate. And these radiometric “clocks” certainly appear to show that living things have in fact existed and changed dramatically on this planet over very long periods of time indeed!

So, why is this a problem for so many within the church? Well, the Seventh-day Adventist Church, in particular, takes the Bible and the claims of its authors quite seriously. Of course, this creates a problem…

Table of Contents

- 1 What did the Authors of the Bible Intend?

- 2 The Basic Concept Behind Radiometric Dating:

- 3 The Potassium-Argon Dating Method:

- 4 The Argon-Argon Method:

- 5 The Uranium-Lead Dating Method:

- 6 Cosmogenic Isotope Dating:

- 7 Fission Track Dating:

- 8 Carbon 14 Dating:

- 9 Assuming A Literal Creation Week and a Noachian-Style Flood:

- 9.1 Before the Flood:

- 9.2 Lord Kelvin and the Age of the Earth:

- 9.3 Increased Radioactive Elements on the Surface of the Planet:

- 9.4 Where did All the Water Come From? and Go?

- 9.5 Bioturbation:

- 9.6 Warm world:

- 9.7 World-wide Paleocurrents:

- 9.8 Lack of Ocean Sediment:

- 9.9 Lack of Erosion:

- 9.10 DNA Mutation Rates as a Clock:

- 9.11 DNA Decay – Devolution not Evolution:

- 9.11.1 Overall mutation rate:

- 9.11.2 Functional DNA in the Genome:

- 9.11.3 Implied functional mutation rate:

- 9.11.4 Ratio of beneficial vs. detrimental mutations:

- 9.11.5 Detrimental mutation rate:

- 9.11.6 Required reproductive/death rate to compensate for detrimental mutation rate:

- 9.11.7 But what about the effect of beneficial mutations?

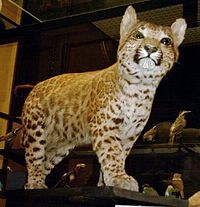

- 9.12 Rapid Speciation:

- 9.13 Circaseptan (7-day) circadian rhythms:

- 9.14 Related

What did the Authors of the Bible Intend?

The authors of the Bible are quite consistent in their claims that life did not evolve from simple to complex over vast eons of time via a very bloody and painful process of survival of the fittest. Rather, these authors claim that God showed them that all the basic “kinds” of living things on this planet were produced within just seven literal days and that there was no death for any sentient creature until the Fall of mankind in Eden. It is also quite clear that these authors were actually trying to convey a literal historical narrative – not an allegory. They actually believed that what they wrote was literal history. Take, for example, the comments of well-known Oxford Hebrew scholar James Barr:

Probably, so far as I know, there is no professor of Hebrew or Old Testament at any world-class university who does not believe that the writer(s) of Genesis 1–11 intended to convey to their readers the ideas that: (a) creation took place in a series of six days which were the same as the days of 24 hours we now experience. (b) the figures contained in the Genesis genealogies provided by simple addition a chronology from the beginning of the world up to later stages in the biblical story (c) Noah’s flood was understood to be world-wide and extinguish all human and animal life except for those in the ark. Or, to put it negatively, the apologetic arguments which suppose the “days” of creation to be long eras of time, the figures of years not to be chronological, and the flood to be a merely local Mesopotamian flood, are not taken seriously by any such professors, as far as I know.

Letter from Professor James Barr to David C.C. Watson of the UK, dated 23 April 1984.

For many, this sets up quite a conundrum. What to believe? – the very strong consensus of the most brilliant minds in the world today pointing to what seems to be overwhelming empirical evidence? – or the “Word of God” in the form of the claims of the human writers of the Bible? How does one decide between these two options? For me, I ask myself, “Where is the weight of evidence” that I can actually understand for myself?

Now, I can only speak for myself here, but for me the weight of evidence and credibility remains firmly on the side of the Bible. One of the many reasons that I’ve come to this conclusion is that I’ve studied the claims of many Biblical critics for most of my adult life, to include the very popular claims of the modern neo-Darwinian scientists, and I’ve found them to be either very weak or downright untenable – and this includes the popular claims regarding radiometric dating methods in general, which will be the focus of this particular discussion.

The Basic Concept Behind Radiometric Dating:

All radiometric dating methods are based on one basic concept. That is, radioactive elements decay at a constant rate into other elements – like a very steady and reliable clock. Of course, it is now known that this rate is somewhat variable and can be affected by solar flares and other factors (Link). However, from what is known so far, the degree of variation caused by these factors appears to be fairly minimal. So, the ticking of the clock itself still remains fairly predictable and therefore useful as a clock. Of course, in order to know how long a clock has been ticking, one has to know when it started ticking. Also, even if the actual ticking of the clock is reliable, one has to know if any outside influence has been able to move the hands of the clock beyond what the mechanism of the clock itself can achieve. Of course, this is where things get a little bit tricky…
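The clock idea above can be put into a few lines of arithmetic. What follows is only a minimal sketch, assuming the idealized case: a closed system, a perfectly constant decay rate, and no daughter product present at the start. The function names are my own, for illustration only.

```python
import math


def decay_constant(half_life_years: float) -> float:
    """Convert a half-life into a decay constant (per year)."""
    return math.log(2) / half_life_years


def fraction_remaining(elapsed_years: float, half_life_years: float) -> float:
    """Fraction of the parent isotope left after the given time."""
    return math.exp(-decay_constant(half_life_years) * elapsed_years)


def age_from_ratio(daughter_to_parent: float, half_life_years: float) -> float:
    """Elapsed time implied by a measured daughter/parent ratio.

    Assumes every daughter atom came from decay of the parent
    (no initial daughter, nothing added or lost since the start).
    """
    return math.log(1.0 + daughter_to_parent) / decay_constant(half_life_years)


if __name__ == "__main__":
    # Equal amounts of parent and daughter correspond to one half-life.
    print(age_from_ratio(1.0, half_life_years=1000.0))
```

Notice that the calculation itself never checks when the clock started or whether anything moved the hands. Those assumptions have to be supplied from outside the math.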

The Potassium-Argon Dating Method:

The only “Pure” Method:

Why do I start with the potassium-argon (K-Ar) dating method? Well, for one thing, it is the only radiometric dating method where the “parent”, or starting radioactive “isotope” or element in a volcanic rock or crystal, can be pure – without any “daughter” or product isotope already present within the rock or crystal that one is trying to date.

“The K-Ar method is the only decay scheme that can be used with little or no concern for the initial presence of the daughter isotope. This is because 40Ar is an inert gas that does not combine chemically with any other element and so escapes easily from rocks when they are heated. Thus, while a rock is molten, the 40Ar formed by the decay of 40K escapes from the liquid.”

The Most Common Method:

Because of this feature where only the parent product starts off in a solidifying rock, and because of its relative abundance within the rocks of the Earth, the K-Ar dating method is by far the most popular in use today. Around 85% of the time, the K-Ar method of dating is used to date various basaltic rocks from around the world.

As radioactive 40K decays into 40Ar, the 40Ar gas becomes trapped in the solid crystals within the lava rock – and can’t escape until the crystals are reheated to the point where the 40Ar gas can again escape into the atmosphere. So, all one has to do to determine the age of a volcanic rock is heat up the crystals within the rock and then measure the amount of 40Ar gas that is released compared to the amount of 40K that remains. The ratio of the parent to the daughter elements is then used to calculate the age of the rock based on the known half-life of 40K – which is around 1.25 billion years. Actually, 40K decays into two different daughter products – 40Ca and 40Ar. However, since the original concentration of calcium (40Ca) cannot be reasonably determined, the ratio of 40K vs. 40Ca is not used to calculate the age of the rock. In any case, the logic for calculating elapsed time here appears to be both simple and straightforward (see formula for the calculation). Basically, when half of the 40K is used up, about 1.25 billion years (one half-life) have passed – simple!
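For those who like to see the arithmetic, here is a minimal sketch of the standard K-Ar age equation. The two decay constants are not taken from this article; they are the conventional values commonly used in geochronology, and I am assuming them here. Because only a fraction of the 40K decays into 40Ar (the rest becomes 40Ca), the equation includes a branching correction.

```python
import math

# Conventional decay constants for 40K (per year); assumed values,
# not taken from the article itself.
LAMBDA_TOTAL = 5.543e-10   # all decays of 40K (to 40Ca and 40Ar)
LAMBDA_TO_AR = 0.581e-10   # only the branch that produces 40Ar


def k40_half_life() -> float:
    """Half-life of 40K implied by the total decay constant."""
    return math.log(2) / LAMBDA_TOTAL


def k_ar_age(ar40_radiogenic: float, k40_remaining: float) -> float:
    """K-Ar age in years from the measured 40Ar*/40K ratio.

    Assumes no 40Ar was present when the rock solidified and that
    no argon or potassium has been gained or lost since.
    """
    ratio = ar40_radiogenic / k40_remaining
    return math.log(1.0 + (LAMBDA_TOTAL / LAMBDA_TO_AR) * ratio) / LAMBDA_TOTAL


if __name__ == "__main__":
    print(f"40K half-life: {k40_half_life():.4e} years")
    # After one half-life the 40Ar*/40K ratio equals the branching fraction.
    print(f"Age at that ratio: {k_ar_age(LAMBDA_TO_AR / LAMBDA_TOTAL, 1.0):.4e} years")
```

Any 40Ar that did not come from decay in place is simply counted as elapsed time by this formula, which is why the starting assumptions matter so much.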

When Some Argon gets Trapped:

What happens, though, if not all of the 40Ar gas escapes before the lava solidifies and the crystals within it have already started to form? Well, of course, some of the 40Ar gets trapped. This is called “extraneous argon” in the literature. Rocks from numerous modern volcanoes with historically known eruption times have been evaluated experimentally, and about a third of the igneous rocks from these volcanoes show a bit of extra 40Ar – usually enough extra 40Ar to make the clock look older by a few hundred thousand to, rarely, up to a couple million years. Overall, however, such errors are relatively minor compared to the ages of rocks usually evaluated by K-Ar dating (on the order of tens to hundreds of millions of years). So, although often cited by creationists as somehow devastating to the credibility of K-Ar dating, this particular potential for error in the clock actually seems rather minor – relatively speaking (see illustration). It certainly doesn’t seem to significantly affect the credibility of rocks dated by the K-Ar method to tens or hundreds of millions of years – at least not as far as I have been able to tell. So, where’s the real problem?

Function of Pressure and Rates of Cooling:

Under Water:

As it turns out, the amount of excess 40Ar is a direct function of both the hydrostatic pressure and the rate of cooling of the lava rocks when they form – under water (Dalrymple and Moore, 1968). This means that, “many submarine basalts are not suitable for potassium-argon dating” (Link). This same rather significant problem is also true for helium-based dating (decay of uranium and thorium produces 4He). For example, “The radiogenic argon and helium contents of three basalts erupted into the deep ocean from an active volcano (Kilauea) have been measured. Ages calculated from these measurements increase with sample depth up to 22 million years for lavas deduced to be recent. Caution is urged in applying dates from deep-ocean basalts in studies on ocean-floor spreading” (Link).

Within Pre-formed Rock:

The “True Age” When K-Ar Dating Goes Wrong:

So, what is the “true” age of these rocks? If the K-Ar levels cannot be trusted, what other clock is more reliable? Along this line, consider that the Himalayan mountains are thought, by most modern scientists, to have started their uplift or orogeny some 50 million years ago. However, in 2008 Yang Wang et al. of Florida State University found thick layers of ancient lake sediment filled with plant, fish and animal fossils typically associated with far lower elevations and warmer, wetter climates. Paleo-magnetic studies determined that these features could be no more than 2 or 3 million years old, not tens of millions of years old. Now that’s a rather significant difference. In an interview with Science Daily in 2008, Wang argued:

“Major tectonic changes on the Tibetan Plateau may have caused it to attain its towering present-day elevations, rendering it inhospitable to the plants and animals that once thrived there as recently as 2-3 million years ago, not millions of years earlier than that, as geologists have generally believed. The new evidence calls into question the validity of methods commonly used by scientists to reconstruct the past elevations of the region. So far, my research colleagues and I have only worked in two basins in Tibet, representing a very small fraction of the Plateau, but it is very exciting that our work to-date has yielded surprising results that are inconsistent with the popular view of Tibetan uplift.” ( Link )

Now, I’m sure that if the organic remains in this region were subjected to carbon-14 dating, ages of less than 50,000 years would be produced. After all, given the significant discrepancy suggested already, I’m not sure why Wang didn’t go ahead and try to carbon date these lake sediments. In any case, this finding of 2-3 Ma for lake sediments still contrasts sharply with mainstream thinking that these regions should be around 50 million years old.

Too Old or Too Young:

What this means, then, is that different methods of measuring elapsed time over very long periods of time often yield very different results. Sometimes, the K-Ar age is far too old. And, sometimes, the K-Ar age is far too young. For example, isotopic studies of the Cardenas Basalt and associated Proterozoic diabase sills and dikes have produced a geologic mystery. Using the conventional assumptions of radioisotope dating, the Rb-Sr and K-Ar systems should give the same “ages”. However, it has been known for decades now that these two different methods actually give very different or “discordant” ages, with the K-Ar “age” being significantly younger than the Rb-Sr “age”. Various explanations, such as argon leakage or a metamorphic event that could have expelled significant argon from these rocks, haven’t panned out (Link). The reason for this, as cited by the New Mexico Geochronology Research Laboratory, is that the basic assumptions behind K-Ar dating cannot be known with confidence over long periods of time:

Because the K/Ar dating technique relies on the determining the absolute abundances of both 40Ar and potassium, there is not a reliable way to determine if the assumptions are valid. Argon loss and excess argon are two common problems that may cause erroneous ages to be determined. Argon loss occurs when radiogenic 40Ar (40Ar*) produced within a rock/mineral escapes sometime after its formation. Alteration and high temperature can damage a rock/mineral lattice sufficiently to allow 40Ar* to be released. This can cause the calculated K/Ar age to be younger than the “true” age of the dated material. Conversely, excess argon (40ArE) can cause the calculated K/Ar age to be older than the “true” age of the dated material. Excess argon is simply 40Ar that is attributed to radiogenic 40Ar and/or atmospheric 40Ar. Excess argon may be derived from the mantle, as bubbles trapped in a melt, in the case of a magma. Or it could be a xenocryst/xenolith trapped in a magma/lava during emplacement. (Link).

Calibration:

There is also another interesting feature about K/Ar dating. Different kinds of rocks and crystals absorb or retain argon at different rates. So, which types of crystals are chosen to produce the “correct” age for the rock? Well, it’s rather subjective. For example, concerning the use of glauconites for K-Ar dating, Faure (1986, p. 78) writes, “The results have been confusing because only the most highly evolved glauconies have yielded dates that are compatible with the biostratigraphic ages of their host rocks whereas many others have yielded lower dates. Therefore, K-Ar dates of ‘glauconite’ have often been regarded as minimum dates that underestimate the depositional age of their host.” In other words, the “correct” clock to use is the one that best matches what one wants the clock to say. It seems to me that this is just a bit subjective and circular.

Summary of K/Ar Dating:

- 40K decays into 40Ar gas at a fairly constant and predictable rate – given the evidence that is currently in hand.

- Most of the time volcanic lavas release all or almost all of the 40Ar gas and are left with essentially pure 40K as a starting point.

- Lavas that cool more rapidly than usual retain some 40Ar gas and therefore show a small increase in apparent age which is fairly insignificant relatively speaking (usually less than 1 Ma).

- However, 40Ar degassing is inversely related to the rate of cooling and the degree of hydrostatic pressure in the surrounding environment.

- Increased hydrostatic pressure and rates of cooling explain why more and more 40Ar gas is retained by lavas produced underwater at greater and greater depths, consistently producing significantly elevated “apparent ages” running into the tens or hundreds of millions of years.

- Significant amounts of 40Ar gas can also be driven into preformed rocks and crystals from the surrounding environment under high pressure conditions – producing a false increase in apparent age running into the tens or hundreds of millions, or even billions, of years. This feature is being discovered to be a fairly common problem.

The Argon-Argon Method:

The Argon-Argon dating method is also not an independent dating method; it must first be calibrated against other dating methods. At best, then, it is a relative dating method. Consider the following explanation from the New Mexico Geochronology Research Laboratory:

“Because this (primary) standard ultimately cannot be determined by 40Ar/39Ar, it must be first determined by another isotopic dating method. The method most commonly used to date the primary standard is the conventional K/Ar technique. . . Once an accurate and precise age is determined for the primary standard, other minerals can be dated relative to it by the 40Ar/39Ar method. These secondary minerals are often more convenient to date by the 40Ar/39Ar technique (e.g. sanidine). However, while it is often easy to determine the age of the primary standard by the K/Ar method, it is difficult for different dating laboratories to agree on the final age. Likewise . . . the K/Ar ages are not always reproducible. This imprecision (and inaccuracy) is transferred to the secondary minerals used daily by the 40Ar/39Ar technique.” ( Link )
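The dependence on a standard described in this quote can be sketched with the usual 40Ar/39Ar age equation, in which a calibration factor (commonly called J) is worked out from a standard of “known” age and then applied to the unknown sample. This is only an illustrative sketch: the decay constant is the conventional value I am assuming, and the standard age and argon ratios below are made-up numbers, not measurements.

```python
import math

LAMBDA_TOTAL = 5.543e-10  # per year; conventional 40K value (assumed)


def j_factor(standard_age_years: float, standard_ratio: float) -> float:
    """Calibration factor J from a standard of assumed age.

    standard_ratio is the measured 40Ar*/39Ar of the standard.
    """
    return (math.exp(LAMBDA_TOTAL * standard_age_years) - 1.0) / standard_ratio


def ar_ar_age(sample_ratio: float, j: float) -> float:
    """Age of the unknown from its 40Ar*/39Ar ratio and the J factor."""
    return math.log(1.0 + j * sample_ratio) / LAMBDA_TOTAL


if __name__ == "__main__":
    # Hypothetical numbers purely for illustration.
    standard_ratio, sample_ratio = 10.0, 5.0
    for assumed_standard_age in (28.0e6, 28.0e6 * 1.01):
        j = j_factor(assumed_standard_age, standard_ratio)
        print(assumed_standard_age, ar_ar_age(sample_ratio, j))
```

Changing the assumed age of the standard shifts the calculated age of every sample dated against it, which is the sense in which the method is relative rather than independent.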

The Uranium-Lead Dating Method:

Introduction:

Of the various radiometric methods, uranium-thorium-lead (U-Th-Pb) was the first used and it is still widely employed today, particularly when zircons are present in the rocks to be dated. However, the basic concept of the Uranium-Lead (U-Pb) dating method is the same as all the other radiometric dating methods. Through a long series of intermediate isotopes, radioactive uranium-238 eventually decays into lead-206, which is stable, not radioactive, and therefore does not decay into anything else. Right off the bat, however, things are a little less straightforward as compared to K-Ar dating. This is because, unlike K-Ar dating, U-Pb dating doesn’t start off with pure uranium and no lead. In other words, there is a mixture, right from the start, of both “parent” and “daughter” isotopes. How then can anyone know when the clock started ticking – even in theory? Well, it’s based on something called an “isochron.”

Isochrons:

Overview:

The word “isochron” basically means “same age”. Isochron dating is based on the ability to draw a straight line between data points that are thought to have formed at the same time. The slope of this line is used to calculate an age of the sample in isochron radiometric dating. The isochron method of dating is perhaps the most logically sound of all the dating methods – at first approximation. This method seems to have internal measures to weed out those specimens that are not adequate for radiometric evaluation. Also, the various isochron dating systems seem to eliminate the problem of not knowing how much daughter element was present when the rock formed.

Isochron dating is unique in that it goes beyond measurements of parent and daughter isotopes to calculate the age of the sample based on a simple ratio of parent to daughter isotopes and a decay rate constant – plus one other key measurement. What is needed is a measurement of a second isotope of the same element as the daughter isotope. Also, several different measurements are needed from various locations and materials within the specimen. This is different from the normal single point test used with the other “generic” methods. To make the straight line needed for isochron dating, each group of measurements (parent – P, daughter – D, daughter isotope – Di) is plotted as a data point on a graph. The X-axis on the graph is the ratio of P to Di, and the Y-axis is the ratio of D to Di. For example, consider the following isochron graph:

Obviously, if a line were drawn between these data points on the graph, there would be a very nice straight line with a positive slope. Such a straight line would seem to indicate a strong correlation between the amount of P in each sample and the extent to which the sample is enriched in D relative to Di. Obviously one would expect an increase in the ratio of D as compared with Di over time because P is constantly decaying into D, but not into Di. So, Di stays the same while D increases over time.

But, what if the original rock was homogenous when it was made? What if all the minerals were evenly distributed throughout, atom for atom? What would an isochron of this rock look like? It would look like a single dot on the graph. Why? Because, any testing of any portion of the object would give the same results.

The funny thing is, as rocks cool, different minerals within the rock attract certain atoms more than others. Because of this, certain mineral crystals within a rock will incorporate different elements into their structure based on their chemical differences. However, since isotopes of the same element have the same chemical properties, there will be no preference in the inclusion of any one isotope over any other in any particular crystalline mineral as it forms. Thus, each crystal will have the same D/Di ratio as the original source material. So, when put on an isochron graph, each mineral will have the same Y-value. However, the P element is chemically different from the D/Di element. Therefore, different minerals will select different ratios of P as compared with D/Di. Such variations in P to D/Di ratios in different minerals would be plotted on an isochron graph as a straight, flat line (no slope).

Since a perfectly horizontal line is likely obtained from a rock as soon as it solidifies, such a horizontal line is consistent with a “zero age.” In this way, even if the daughter element is present initially when the rock is formed, its presence does not necessarily invalidate the clock. The passage of time might still be able to be determined based on changes in the slope of this initially horizontal line.

As time passes, P decays into D in each sample. That means that P decreases while D increases. This results in a movement of the data points. Each data point moves to the left (decrease in P) and upwards (increase in D). Since radioactive decay proceeds in a proportional manner, the data points with the most P will move the most in a given amount of time. Thus, the data points maintain their linear arrangement over time as the slope between them increases. The degree of slope can then be used to calculate the time since the line was horizontal or “newly formed”. The slope of the line gives the age, and the Y-intercept gives the initial daughter ratio. The scheme is both mathematically and theoretically sound – given that one is working with a truly closed system.
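The relationship between slope and age described above can be written as D/Di = (D/Di)0 + (P/Di)(e^(λt) − 1), so the slope equals e^(λt) − 1 and the age is ln(1 + slope)/λ. Here is a minimal sketch of that calculation using a simple least-squares line. The data are synthetic, generated under the closed-system assumption, and the rubidium-87 decay constant is a conventional value I am assuming for illustration.

```python
import math

LAMBDA_RB87 = 1.42e-11  # per year; conventional value (assumed)


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def isochron_age(xs, ys, decay_constant):
    """Return (age in years, initial D/Di) from P/Di and D/Di values."""
    slope, intercept = fit_line(xs, ys)
    return math.log(1.0 + slope) / decay_constant, intercept


def synthetic_isochron(initial_p_over_di, initial_d_over_di, age, decay_constant):
    """Closed-system evolution of minerals that start on a flat line."""
    surviving = math.exp(-decay_constant * age)
    xs = [x0 * surviving for x0 in initial_p_over_di]
    ys = [initial_d_over_di + x0 * (1.0 - surviving) for x0 in initial_p_over_di]
    return xs, ys


if __name__ == "__main__":
    xs, ys = synthetic_isochron([0.1, 0.5, 1.0, 2.0], 0.704, 1.0e9, LAMBDA_RB87)
    print(isochron_age(xs, ys, LAMBDA_RB87))
```

The fit recovers the age that was put in only because the synthetic minerals all started with the same D/Di ratio and nothing was added or removed afterward.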

The nice thing about isochrons is that they would seem to be able to detect any sort of contamination of the specimen over time. If any data point became contaminated by outside material, it would no longer find itself in such a nice linear pattern. Thus, isochrons do indeed seem to contain somewhat of an internal indicator or control for contamination that indicates the general suitability or unsuitability of a specimen for dating.

So, it is starting to look like isochron dating has solved some of the major problems of other dating methods. However, isochron dating is still based on certain key assumptions:

- All areas of a given specimen formed at the same time

- The specimen was entirely homogenous when it formed (not layered or incompletely mixed)

- Limited Contamination (contamination can form straight lines that are misleading)

- Isochrons that are based on intra-specimen crystals can be extrapolated to date the whole specimen

Given these assumptions and the above discussion on isochron dating, some interesting problems arise as one considers certain published isochron dates. As it turns out, up to “90%” of all published dates based on isochrons are “whole-rock” isochrons (Link).

So, what exactly is a whole-rock isochron? Whole-rock isochrons are isochrons that are based, not on intra-rock crystals, but on variations in the non-crystalline portions of a given rock. In other words, sample variations in P are found in different parts of the same rock without being involved with crystalline matrix uptake. This is a problem because the basis of isochron dating is founded on the assumption of original homogeny. If the rock, when it formed, was originally homogenous, then the P element would be equally distributed throughout. Over time, this homogeny would not change. Thus, any such whole-rock variations in P at some later time would mean that the original rock was never homogenous when it formed. Because of this problem, whole-rock isochrons are invalid, representing the original incomplete mixing of two or more sources.

Interestingly enough, whole rock isochrons can be used as a test to see if the sample shows evidence of mixing. If there is a variation in the P values of a whole rock isochron, then any isochron obtained via crystal based studies will be automatically invalid. The P values of various whole-rock samples must all be the same, falling on a single point on the graph. If such whole-rock samples are identical as far as their P values, mixing would still not be ruled out completely, but at least all available tests to detect mixing would have been satisfied. And yet, such whole-rock isochrons are commonly published. For example, many isochrons used to date meteorites are most probably the result of mixing since they are based on whole-rock analysis, not on crystalline analysis (Link).

There are also methods used to detect the presence of mixing with crystalline isochron analysis. If a certain correlation is present, the isochron may be caused by mixing. However, even if the correlation is present, it does not mean the isochron is caused by mixing, and even if the correlation is absent, the isochron could still be caused by a more complex mixing (Woodmorappe, 1999, pp. 69-71). Therefore such tests are of questionable value.

Also, using a “whole-rock” to obtain a date ignores a well-known fractionation problem for the formation of igneous rocks. As originally noted by Elaine Kennedy (Geoscience Reports, Spring 1997, No. 22, p.8):

“Contamination and fractionation issues are frankly acknowledged by the geologic community (Faure, 1986). For example, if a magma chamber does not have homogeneously mixed isotopes, lighter daughter products could accumulate in the upper portion of the chamber. If this occurs, initial volcanic eruptions would have a preponderance of daughter products relative to the parent isotopes. Such a distribution would give the appearance of age. As the magma chamber is depleted in daughter products, subsequent lava flows and ash beds would have younger dates.

Such a scenario does not answer all of the questions or solve all of the problems that radiometric dating poses for those who believe the Genesis account of Creation and the Flood. It does suggest at least one aspect of the problem that could be researched more thoroughly.”

This is also interesting in light of the work of Robert B. Hayes published in a 2017 paper (Link) about the fact that different isotopes or different types of atoms move around at different rates within a rock. This is known as “differential mass diffusion.” For example, strontium-86 atoms are lighter than strontium-87 or rubidium-87 atoms, meaning they will spread through surrounding rock faster, and that differential may be influenced further by the properties of the sample itself – which produces an “isotope effect”. The isotope effect of isotopes with smaller masses moving around faster than those with larger masses produces “concentration gradients” of one isotope compared to the other when there are no contributions from radioactive decay. In addition, the rate of diffusion is also influenced by numerous physical factors of the rock itself, such as “the type of rock, the number of cracks, the amount of surface area, and so on.” (Link)
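As a rough feel for the size of such a mass effect, the sketch below uses the idealized inverse-square-root mass scaling (Graham's law). This is an assumption on my part: it is not Hayes's model, it strictly applies to gases, and diffusion in a solid can show a different mass dependence. The masses are simply the mass numbers 86 and 87.

```python
import math


def graham_diffusion_ratio(light_mass, heavy_mass):
    """Ratio of diffusion rates (light / heavy) under an idealized
    inverse-square-root mass scaling. Illustrative assumption only."""
    return math.sqrt(heavy_mass / light_mass)


# Mass numbers used as stand-ins for the atomic masses of 86Sr and 87Sr.
ratio_sr = graham_diffusion_ratio(86.0, 87.0)
excess_percent = (ratio_sr - 1.0) * 100.0

print(f"86Sr diffuses about {excess_percent:.2f}% faster than 87Sr in this idealization")
```

The per-step difference is small under this idealization, so any real effect on a calculated date would depend on how long diffusion operates and on the rock-specific factors listed above.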


Yet again, this means that these rocks or crystals within rocks that are radiometrically dated aren’t really “closed systems” – which is a real problem when it comes to reliably determining the “ages” of rocks. Hayes concludes:

The process as it’s currently applied, is likely to overestimate the age of samples, and considering scientists have been using it for decades, our understanding of Earth’s ancient timeline could be worryingly inaccurate. If we don’t account for differential mass diffusion, we really have no idea how accurate a radioisotope date actually is. (Link)

As far as the degree of inaccuracy regarding such potential “overestimates” of the ages of rocks, consider lava flows from volcanoes that erupted after the Grand Canyon was already formed. These lava flows formed temporary dams that blocked the flow of the Colorado River before collapsing catastrophically, releasing huge walls of water and causing very rapid erosion of the downstream canyon system. In any case, it is most interesting to note that these lava flows have been dated by K-Ar techniques to between 500,000 and 1 million years old. Yet, these same lava flows date to 1.143 Ma via the Rb-Sr isochron method of radiometric dating – very similar to the Rb-Sr isochron “ages” of the very oldest basaltic rocks in the bottom of the Grand Canyon (Austin 1994; Snelling 2005c; Oard and Reed, 2009). Some have argued that this dramatic age discrepancy is perhaps due to inherited Rb-Sr ages from their mantle source, deep beneath the Grand Canyon region. However, this argument could also be used to claim that all of the basalts in this region inherited their Rb-Sr “ages” from the very same mantle source – making them all effectively meaningless as far as age determination is concerned. After all, dates of these very same basalts calculated via the helium diffusion method yielded an age of just 6000 years. How reliable, then, can any of it be, since all of these rocks are really very open systems, subject to extensive loss and/or gain of very mobile isotopes?

Geologists Starting to Question the Reliability of Isochrons:

Interestingly, mainstream scientists are also starting to question the validity of isochron dating. In January of 2005, four geologists from the UK, Wisconsin and California, in Geology, wrote:

The determination of accurate and precise isochron ages for igneous rocks requires that the initial isotope ratios of the analyzed minerals are identical at the time of eruption or emplacement. Studies of young volcanic rocks at the mineral scale have shown this assumption to be invalid in many instances. Variations in initial isotope ratios can result in erroneous or imprecise ages. Nevertheless, it is possible for initial isotope ratio variation to be obscured in a statistically acceptable isochron. Independent age determinations and critical appraisal of petrography are needed to evaluate isotope data. . .

[For accurate results, the geologist also has to know that the formation of] plutonic rocks requires (1) slow diffusion, the rates of which depend on the element and mineral of interest, and (2) relatively rapid cooling—or, more strictly, low integrated temperature-time histories relative to the half-life of the isotopic system used. The cooling history will depend on the volume of magma involved and its starting temperature, which in turn is a function of its composition. . .

If the initial variation is systematic (e.g., due to open-system mixing or contamination), then isochrons are generated that can be very good [based on their fit to the graph], but the ages are geologically meaningless…

The occurrence of significant isotope variation among mineral phases in Holocene volcanic rocks questions a fundamental tenet in isochron geochronology—that the initial isotope composition of the analyzed phases is identical. If variations in isotopic composition are common among the components (crystals and melt) of zero-age rocks, should we not expect similar characteristics of older rocks? We explore the consequence of initial isotope variability and the possibility that it may compromise geochronological interpretations. . .

SUMMARY

- The common observation of significant variation in 87Sr/86Sri among components of zero-age rocks suggests that the assumption of a constant 87Sr/86Sri ratio in isochron analysis of ancient rocks may not be valid in many instances.

- Statistical methods may not be able to distinguish between constant or variable 87Sr/86Sri ratios, particularly as rocks become older or if the 87Sr/86Sri ratio is correlated with the 87Rb/86Sr ratio as a consequence of petrogenetic processes.

- Independent ages are needed to evaluate rock-component isochrons. If they do not agree, then the age-corrected 87Sr/86Sri ratios of the rock components (minerals, melt inclusions, groundmass) may constrain differentiation mechanisms such as contamination and mixing [if they can be corrected by independent means].

Davidson, Charlier, Hora, and Perlroth, “Mineral isochrons and isotopic fingerprinting: Pitfalls and promises,” Geology, (2005) Vol. 33, No. 1, pp. 29-32 [Emphasis Added] (Link)

In short, isochron dating is not the independent dating method that it was once thought to be. As with the other dating methods discussed already, isochron dating is also dependent upon “independent age determinations”.

Isochrons have been touted by the uniformitarians as a fail-safe method for dating rocks, because the data points are supposed to be self-checking (Kenneth Miller used this argument in a debate against Henry Morris years ago). Now, geologists, publishing in the premier geological journal in the world, are telling us that isochrons can look perfect on paper yet give meaningless ages, by orders of magnitude, if the initial conditions are not known, or if the rocks were open systems at some time in the past.


But geologists still try to put a happy face on the situation. It’s not all bad news, they say, because if the geologist can know the true age by another method, some useful information may be gleaned out of the errors. The problem is that it is starting to get really difficult to find a truly independent dating method out of all the various dating methods available. This is because most other radiometric dating methods, with the exceptions of the potassium-argon, zircon, fission track, and carbon-14 dating methods, require the use of the isochron method.

Zircons:

Overview:

John Strutt was the first to attempt dating zircon crystals (Strutt, 1909). Arthur Holmes, a graduate student of Strutt at Imperial College, argued that the most reliable way to determine ages would be to measure Pb accumulation in high-U minerals – such as zircons (Holmes, 1911). For fifty years, U-Pb ages were determined by chemical analyses of total U and Pb contents of zircons and other crystals (Link). Isochron dating was developed some time later.

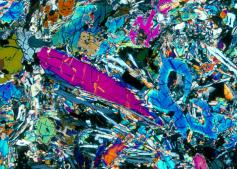

Zircons are crystals, found in most igneous rocks, that preferentially incorporate uranium and exclude lead. Theoretically, this would be a significant advantage in uranium-lead dating because, as with potassium-argon dating, any lead subsequently discovered within the crystalline lattice of a zircon crystal would have had to come from the radioactive decay of uranium – which would make it a very good clock. Also, the “closing temperature” of the zircon crystal is rather high at 900°C. This, together with the fact that zircons are very hard, would seem to make it rather difficult to add or remove parent and/or daughter elements from it.

Just a few Problems:

However, this assumption is mistaken due to the fact that the zircon crystal itself undergoes radiation damage over time. The radioactive material contained within the zircon crystalline matrix damages and breaks down this matrix over time. For example, “During the alpha decay steps, the zircon crystal experiences radiation damage, associated with each alpha decay. This damage is most concentrated around the parent isotope (U and Th), expelling the daughter isotope (Pb) from its original position in the zircon lattice. In areas with a high concentration of the parent isotope, damage to the crystal lattice is quite extensive, and will often interconnect to form a network of radiation damaged areas. Fission tracks and micro-cracks within the crystal will further extend this radiation damage network. These fission tracks inevitably act as conduits deep within the crystal, thereby providing a method of transport to facilitate the leaching of lead isotopes from the zircon crystal” (Link).

In fact, this crystalline damage allows not only lead isotopes, but uranium isotopes, which are also water soluble, to leak both in and out of the crystalline matrix over time – according to the surrounding concentration gradient of these various isotopes. In other words, the primary assumption that the lead within zircons is entirely derived from radioactive decay simply isn’t true. Significant quantities of lead can leach into zircons because of this radioactive damage problem.

“Because most upper crustal rocks cooled below annealing temperatures long after their formation, early formed lead rich in 207Pb is locked in annealed sites so that the leachable component is enriched in recently formed 206Pb. The isotopic composition of the leachable lead component then depends more on the cooling history and annealing temperatures of each host mineral than on their geological age”

Thomas Krogh & Donald Davis, Preferential Dissolution of Radiogenic Pb from Alpha Damaged Sites in Annealed Minerals Provides a Mechanism for Fractionating Pb Isotopes in the Hydrosphere, Cambridge Publications, Volume 5(2), 606, 2000 (Link).

“The behavior of their U-Pb isotopic systems during different geological events is sometimes complex, leading to possible misinterpretations if it is not possible to compare the zircon data with data obtained using other geochronological methods.”

Lead inheritance phenomena related to zircon grain size in the Variscan anatectic granite of Ax-les-Thermes (Pyrenees, France), European Journal of Mineralogy, 1993 (Link)

What this means is that zircon-based dating is no longer an independent dating method, but must now be confirmed or calibrated by other radiometric dating methods due to the inherent and often undetectable errors involving the gain or loss of parent and/or daughter isotopes.

What is also interesting is that the different uranium isotopes are not equally fractionated (i.e., they don’t enter or leave the zircon at the same rate) and show differences in water solubility:

“238U decays via two very short-lived intermediates to 234U. Since 234U and 238U have the same chemical properties, it might be expected that they would not be fractionated by geological processes. However, Cherdyntsev and co-workers (1965, 1969) showed that such fractionation does occur. In fact, natural waters exhibit a considerable range in 234U/238U activities from unity (secular equilibrium) to values of 10 or more (e.g. Osmond and Cowart, 1982). Cherdyntsev et al. (1961) attributed these fractionations to radiation damage of crystal lattices, caused both by α emission and by recoil of parent nuclides. In addition, radioactive decay may leave 234U in a more soluble +6 charge state than its parent (Rosholt et al., 1963). These processes (termed the ‘hot atom’ effect) facilitate preferential leaching of the two very short-lived intermediates and the longer-lived 234U nuclide into groundwater. The short-lived nuclides have a high probability of decaying into 234U before they can be adsorbed onto a substrate, and 234U is itself stabilised in surface waters as the soluble UO2++ ion, due to the generally oxidising conditions prevalent in the hydrosphere.” (Link)

And, this diffusion problem is, of course, directly related to heat. In other words, the hotter the rock/crystal, the faster the diffusion process.

“This is dramatically illustrated by the contact metamorphic effects of a Tertiary granite stock on zircon crystals in surrounding regionally metamorphosed Precambrian sediments and volcanics. Within 50 feet of the contact, the 206Pb concentration drops from 150 ppm to 32 ppm, with a corresponding drop in 238U ‘ages’ from 1405 Ma to 220 Ma” (Link).
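To show how sensitive a uranium-lead “age” is to this kind of lead movement, here is a minimal sketch based on the standard decay relation for 238U to 206Pb. The decay constant is the conventional value (an assumption, since it is not quoted above), the function names are hypothetical, and the sketch assumes the uranium content stays fixed while only lead is lost or gained, which a real zircon need not obey. It is not an attempt to reproduce the figures in the quotation.

```python
import math

# Conventional 238U decay constant in 1/yr (assumed; not stated in the text).
LAMBDA_U238 = 1.55125e-10


def pb_u_ratio(age_years):
    """Radiogenic 206Pb/238U atom ratio after a closed-system age."""
    return math.exp(LAMBDA_U238 * age_years) - 1.0


def apparent_age(ratio):
    """Age implied by a measured 206Pb/238U atom ratio."""
    return math.log(1.0 + ratio) / LAMBDA_U238


def age_after_lead_change(true_age_years, fraction_lost):
    """Apparent age if a fraction of the radiogenic lead is lost.
    A negative fraction models lead gain. Uranium is held constant."""
    remaining = pb_u_ratio(true_age_years) * (1.0 - fraction_lost)
    return apparent_age(remaining)


half_lost = age_after_lead_change(1.0e9, 0.5)
half_gained = age_after_lead_change(1.0e9, -0.5)

print(f"closed system: {apparent_age(pb_u_ratio(1.0e9)) / 1e6:.0f} Ma")
print(f"50% lead lost: {half_lost / 1e6:.0f} Ma")
print(f"50% lead gained: {half_gained / 1e6:.0f} Ma")
```

Under these assumptions the apparent age tracks the lead content almost proportionally, so any undetected movement of lead translates directly into an error in the calculated date.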

Even when there is no visual evidence of crystal disruption within the zircon, research by Piazolo et al., published in 2016, demonstrated significant mobility of various isotopes within zircons. They note that it is a “fundamental assumption” of zircon dating that trace elements do not diffuse or only move “negligible distances” through a “pristine lattice” within the crystal being examined. However, their research shows that this assumption simply isn’t true – even when the crystalline lattice is “pristine”:

For example, the reliable use of the mineral zircon (ZrSiO4) as a U-Th-Pb geochronometer and trace element monitor requires minimal radiogenic isotope and trace element mobility. Here, using atom probe tomography, we document the effects of crystal–plastic deformation on atomic-scale elemental distributions in zircon revealing sub-micrometre-scale mechanisms of trace element mobility. Dislocations that move through the lattice accumulate U and other trace elements. Pipe diffusion along dislocation arrays connected to a chemical or structural sink results in continuous removal of selected elements (for example, Pb), even after deformation has ceased…

Although experimental determination of diffusion rates within pristine zircons shows that substantial Pb diffusion should only occur at extreme temperatures, there is some evidence that Pb diffusion can take place at lower temperatures. This is often attributed to the annealing of regions of radiation damage within the crystalline lattice. Such damaged (metamict) domains are only partially crystalline, may be porous, and are usually cited as the cause of either relative Pb-loss (discordance) or Pb-gain (reverse discordance) recorded on the micrometre scale…

Nearby solute atoms are attracted by the strain field associated with the dislocation from a region that we describe here as a ‘capture zone’. The size of this zone varies between elements since it depends on the lattice diffusion rate, which is affected by temperature, the relative sizes of the solute and matrix atoms and the bonding type… Additional substantial element mobility may occur through pipe diffusion, the process of relatively rapid diffusion of atoms along dislocation cores…

This process must be ongoing throughout the history of this sample, even after the deformation event and at lower temperatures. This is unequivocal evidence for pipe diffusion along a dislocation array in zircon, resulting in relatively fast and continuous redistribution of Pb over >10 μm [resulting in the production of nanospheres of pure metallic lead – see arrows in the photograph to the right]…

Reverse discordance has been the subject of a number of studies and is generally observed in high-U zircons (above a threshold of ~2,500 p.p.m. U). The phenomenon has been attributed to possible matrix effects, causing increased relative sputtering of Pb from high-U, metamict regions and resulting in a 1–3% increase in 206Pb/238U ages for every 1,000 p.p.m. U. However, in the zircon analysed here, the relationship between U content and reverse discordancy is not simple: several points show a degree of reverse discordance despite having U concentrations below 2,000 p.p.m., whereas the highest measured reverse discordancy (21%) is from a location with 3,102 p.p.m. U, only just above the threshold for which reverse discordancy is normally attributable to matrix effects. Different to analyses exhibiting some degree of reverse discordance, we interpret that the chemical signal of spot 2.8 represents a domain that is completely metamict. Although we cannot rule out some matrix effects in high-U, metamict zones, the complex relationship between U content and reverse discordancy in this zircon is further evidence for an additional process of Pb-enrichment—namely the pipe diffusion of Pb along dislocation arrays into adjacent metamict zones… Radiation damage may enhance this pipe diffusion/clustering behaviour…

Our results demonstrate the importance of deformation processes and microstructures on the localized trace element concentrations and continuous redistribution from the nanometre to micrometre scale in the mineral zircon… [and] have important implications for the use of zircon as a geochronometer, and highlight the importance of deformation on trace element redistribution in minerals and engineering materials… Dislocation movement through the zircon lattice can effectively sweep up and concentrate solute atoms at geological strain rates. Dislocation arrays can act as fast pathways for the diffusion of incompatible elements such as Pb across distances of >10 μm if they are connected to a chemical or structural sink. Hence, nominally immobile elements can become locally extremely mobile. Not only does our study confirm recent speculation that an understanding of the deformation microstructures within zircon grains is a necessity for subsequent, robust geochronological analyses but it also sheds light on potential pit-falls when utilizing element concentrations and ratios for geological studies.

Sandra Piazolo et al., Deformation-induced trace element redistribution in zircon revealed using atom probe tomography, Nature Communications 7, Article number: 10490, doi:10.1038/ncomms10490. Received 31 August 2015; accepted 18 December 2015; published 12 February 2016 (Link)

Such factors can only contribute to the “preferential leaching” of various isotopes from zircons over time:

“238U decays via two very short-lived intermediates to 234U. Since 234U and 238U have the same chemical properties, it might be expected that they would not be fractionated by geological processes. However, Cherdyntsev and co-workers (1965, 1969) showed that such fractionation does occur. In fact, natural waters exhibit a considerable range in 234U/238U activities from unity (secular equilibrium) to values of 10 or more (e.g. Osmond and Cowart, 1982). Cherdyntsev et al. (1961) attributed these fractionations to radiation damage of crystal lattices, caused both by α emission and by recoil of parent nuclides. In addition, radioactive decay may leave 234U in a more soluble +6 charge state than its parent (Rosholt et al., 1963). These processes (termed the ‘hot atom’ effect) facilitate preferential leaching of the two very short-lived intermediates and the longer-lived 234U nuclide into groundwater. The short-lived nuclides have a high probability of decaying into 234U before they can be adsorbed onto a substrate, and 234U is itself stabilised in surface waters as the soluble UO2++ ion, due to the generally oxidising conditions prevalent in the hydrosphere.” (Link)

Consider also that in a 2011 study, researchers led by geologist Birger Rasmussen of Curtin University in Bentley, Australia, analyzed more than 7000 zircons from a portion of the Jack Hills of Western Australia, where rocks are between 2.65 billion and 3.05 billion years old:

A total of 485 zircons held inclusions, and about a dozen or so of these contained radioactive trace elements that allowed the researchers to determine their ages. Those ages fell into two clumps—one of about 2.68 billion years and another of about 800 million years. “This was a big surprise to us,” Rasmussen says, especially because the zircons themselves ranged in age from 3.34 billion and 4.24 billion years old.

Rather than matching the ages of the zircons, the researchers note, the ages of the inclusions matched the ages of the metamorphic minerals surrounding the zircons. Some of those inclusions lie along hairline fractures in the zircons, a route by which mineral-rich fluids could have infiltrated, Rasmussen says. But other inclusions appear to be entirely enclosed. In those cases, the fluids may have traveled along defects in the zircon’s crystal structure caused by radioactive decay or along pathways that are either too small to see or oriented such that they’re invisible.

In recent years, some researchers have used analyses of zircons and their inclusions—and in particular, the temperatures and pressures they’ve been exposed to since their formation—to infer the presence of oceans or of modern-style plate tectonics on Earth more than 4 billion years ago, well before previously suspected, Rasmussen says. But based on the team’s new findings, which will be reported next month in Geology, those conclusions are suspect, he notes.

“This paper will stir people up,” says Ian Williams, an isotope geochemist at Australian National University in Canberra. “These results make it much less likely that Jack Hills zircons were involved in plate tectonics.” The team’s results “suggest that analyses of zircon inclusions can’t be trusted much at all,” adds Jonathan Patchett, an isotope geochemist at the University of Arizona in Tucson. “This is really nice work, very strong.” (Link)

Summary:

What this means is that:

- Zircon crystals are open systems that become more and more open over time in line with the degree of radioactive material that they contain and the corresponding radiation damage that takes place.

- Various isotopes, to include uranium and lead isotopes, can move around fairly rapidly within apparently “pristine” zircons – and probably back and forth between zircons and the surrounding igneous rock.

- Zircon dating methods are not independent and must be verified or calibrated against other radiometric dating methods.

- “Old zircons” can be incorporated into “new zircons” without a clear distinction.

- It seems then that such systems cannot be used as independently reliable clocks over long periods of time.

Cosmogenic Isotope Dating:

As another example, consider that 3H levels (from decay of a cosmogenic nuclide, 36Cl, produced by the interaction of cosmic rays with the nucleus of an atom) have been used to establish the theory that the driest desert on Earth, the Coastal Range of the Atacama Desert in northern Chile (which is 20 times drier than Death Valley), has been without any rain or significant moisture of any kind for around 25 million years. The only problem with this theory is that investigators have since discovered fairly extensive deposits of very well preserved animal droppings associated with grasses as well as human-produced artifacts such as arrowheads and the like. Radiocarbon dating of these findings indicates very active life in at least semiarid conditions within the past 11,000 years – a far cry from 25 million years. So, what happened?

As it turns out, cosmogenic isotope dating has a host of problems. The production rate is a huge issue. Production rates depend upon several factors to include “latitude, altitude, surface erosion rates, sample composition, depth of sample, variations of cosmic and solar ray flux, inclusion of other radioactive elements and their contribution to target nucleotide production, variations in the geomagnetic field, muon capture reactions, various shielding effects, and, of course, the reliability of the calibration methods used.”


So many variables become somewhat problematic. This problem has been highlighted by studies that have compared the production rates of certain isotopes published by different groups of scientists. At least regarding 36Cl in particular, there has been “no consistent pattern of variance seen between each respective research group’s production rates” (Swanson 2001). To put it differently, “different analytical approaches at different localities were used to work out 36Cl production rates, which are discordant.” (See also: CRONUS-Earth project, Link – last accessed March 2009)
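To illustrate why the production rate matters so much, here is a minimal sketch of the simplest exposure-age relation: constant production, radioactive decay, and no erosion or shielding. Those simplifications, the 36Cl half-life of roughly 301,000 years, and the production rates of 10 and 8 atoms per gram per year are all assumptions chosen for illustration, not values taken from any of the studies mentioned above.

```python
import math

HALF_LIFE_CL36 = 3.01e5  # years (commonly cited value; assumed here)
LAMBDA_CL36 = math.log(2) / HALF_LIFE_CL36


def concentration(production_rate, age_years):
    """Nuclide concentration after steady production plus decay, no erosion."""
    return production_rate / LAMBDA_CL36 * (1.0 - math.exp(-LAMBDA_CL36 * age_years))


def exposure_age(conc, production_rate):
    """Invert the relation above to get an exposure age from a concentration."""
    saturation = production_rate / LAMBDA_CL36
    if conc >= saturation:
        raise ValueError("concentration is at or above saturation for this rate")
    return -math.log(1.0 - conc / saturation) / LAMBDA_CL36


# Hypothetical sample: 50,000 years of exposure at 10 atoms/g/yr.
measured = concentration(10.0, 50_000.0)

age_same_rate = exposure_age(measured, 10.0)
age_lower_rate = exposure_age(measured, 8.0)  # same sample, 20% lower assumed rate

print(f"assumed rate 10: {age_same_rate:,.0f} years")
print(f"assumed rate  8: {age_lower_rate:,.0f} years")
```

In this idealized case, a 20% disagreement over the production rate shifts the calculated age by more than 20%, before any of the other variables listed above are considered.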

In short, it doesn’t inspire one with a great deal of confidence in the unbiased reliability of cosmogenic isotopic dating techniques and only adds to the conclusion that different dating methods do not generally agree with each other unless they are first calibrated against each other.

Fission Track Dating:

Overview:


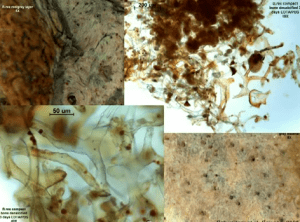

Fission track dating is a radioisotopic dating method that depends on the tendency of uranium (Uranium-238) to undergo spontaneous fission as well as the usual decay process. The large amount of energy released in the fission process ejects the two nuclear fragments into the surrounding crystalline material, causing damage that produces linear paths called fission tracks. The number of these tracks, generally 10-20 µm in length, is a function of the initial uranium content of the sample and of time. These tracks can be made visible under light microscopy by etching with an acid solution so they can then be counted.

The usefulness of this as a dating technique stems from the tendency of some materials to lose their fission-track records when heated, thus producing samples that contain fission-tracks produced since they last cooled down. The useful age range of this technique is thought to range from 100 years to 100 million years before present (BP), although error estimates are difficult to assess and are rarely given. Generally it is thought to be most useful for dating in the window between 30,000 and 100,000 years BP.

A problem with fission-track dating is that the rates of spontaneous fission are very slow, requiring the presence of a fairly significant amount of uranium in a sample to produce useful numbers of tracks over time. Additionally, variations in uranium content within a sample can lead to large variations in fission track counts in different sections of the same sample.

Calibration:

Because of such potential errors, most forms of fission track dating use a form of calibration or “comparison of spontaneous and induced fission track density against a standard of known age. The principle involved is no different from that used in many methods of analytical chemistry, where comparison to a standard eliminates some of the more poorly controlled variables. In the zeta method, the dose, cross section, and spontaneous fission decay constant, and uranium isotope ratio are combined into a single constant.” (Link)

“Each dosimeter glass is calibrated repeatedly against zircon age standards from the Fish Canyon and Bishop tuffs, the Tardree rhyolite and Southern African kimberlites, to obtain empirical calibration factors ζ.” (Link)

“Zircon fission track ages, in agreement with independent K-Ar ages, are obtained by calculating the same track count data with each of the preferred values of λf (λf = 7.03 × 10⁻¹⁷ yr⁻¹ and 8.46 × 10⁻¹⁷ yr⁻¹) together with appropriate, selected neutron dosimetry schemes. An alternative approach is presented, formally relating unknown ages of samples to known ages of standards, either by direct comparison of standard and sample track densities, or by the repeated calibration of a glass against age standards.” (Link)

Of course, this means that the fission track dating method is not an independent method of radiometric dating, but is dependent upon the reliability of other dating methods – particularly zircon-age standards usually derived from K-Ar, Ar-Ar, Rb-Sr, or U-Pb dating methods. The reason for this is also at least partly due to the fact that the actual rate of fission track production is debatable. Some experts suggest using a rate constant of 6.85×10⁻¹⁷ yr⁻¹ while others recommend using a rate of 8.46×10⁻¹⁷ yr⁻¹ (G. A. Wagner, Letters to Nature, June 16, 1977). This difference might not seem like much, but when it comes to dates of over one or two million years, this difference amounts to about 25-30% in the estimated age value. In other words, the actual rate of fission track production isn’t really known, nor is it known if this rate can be affected by various concentrations of 238U or other physical factors. For example, all fission reactions produce neutrons. What happens if fission from some other radioactive element, like 234U or some other radioisotope, produces tracks? Might not these trackways be easily confused with those created by fission of 238U?
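As a rough check on how much the choice of rate constant matters, the sketch below assumes that, to first order, a fission track age scales inversely with the spontaneous fission constant when everything else is held fixed. That first-order scaling is my simplifying assumption and the function name is hypothetical; the two constants are the ones quoted above.

```python
LAMBDA_F_LOW = 6.85e-17   # 1/yr, quoted above
LAMBDA_F_HIGH = 8.46e-17  # 1/yr, quoted above


def relative_age_shift(lambda_used, lambda_alternative):
    """Fractional change in a calculated age when switching from lambda_used
    to lambda_alternative, assuming age is proportional to 1 / lambda_f."""
    return lambda_used / lambda_alternative - 1.0


# Same track counts, recalculated with the other constant.
shift_to_low = relative_age_shift(LAMBDA_F_HIGH, LAMBDA_F_LOW)
shift_to_high = relative_age_shift(LAMBDA_F_LOW, LAMBDA_F_HIGH)

print(f"switching to the lower constant raises the age by {shift_to_low:.1%}")
print(f"switching to the higher constant lowers the age by {-shift_to_high:.1%}")
```

Under this simple scaling the shift comes out at roughly a fifth to a quarter of the calculated age, in the same neighborhood as the figure given above.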

The human element is also important here. Fission trackways have to be manually counted. This is problematic since interpreting what is and what is not a true trackway isn’t easy. Geologists themselves recognize the problem of mistaking non-trackway imperfections as fission tracks. “Microlites and vesicles in the glass etch out in much the same way as tracks” (Link). Of course, there are ways to avoid some of these potential pitfalls. For example, it is recommended that one choose samples with as few vesicles and microlites as possible. But, how is one to do this if they are so easily confused with true trackways? Fortunately, there are a few other “hints”. True tracks are straight, never curved. They also tend to show characteristic ends that demonstrate “younging” of the etched track. True tracks are thought to form randomly and have a random orientation. Therefore, trackways that show a distribution pattern tend not to be trusted as being “true”. Certain color and size patterns within a certain range are also used as helpful hints. Yet, even with all these hints in place, it has been shown that different people count the same trackways differently – up to 20% differently (Link). Add the human counting error to the uncertainty in the fission track rate and the combined range of error approaches 50%.

Consider also that in 2000, Raymond Jonckheere and Gunther Wagner (American Mineralogist, 2000) published results showing that there are two kinds of real fission trackways that had “not been identified previously.” The first type of trackway identified is a “stable” track and the second type is produced through fluid inclusions. As it turns out, the “stable tracks do not shorten significantly even when heated to temperatures well above those normally sufficient for complete annealing of fission tracks.” Of course, this means that the “age” of the sample would not represent the time since the last thermal episode as previously thought. The tracks through fluid are also interesting. They are “excessively long”. This is because a fission fragment traveling through a fluid inclusion does so without appreciable energy loss. Such features, if undetected, “can distort the temperature-time paths constructed on the basis of confined fission-track-length measurements.” Again, the authors propose measures to avoid such pitfalls, but this just adds to the complexity of this dating method and calls into question the dates obtained before the publication of this paper (i.e., before 2000).

Add up all of these potential pitfalls and it becomes quite clear why calibration with other dating techniques is required in fission track dating. It just isn’t very reliable or accurate by itself. It is no wonder, then, that fission-track dating is in general agreement with Potassium-Argon dating or Uranium-Lead dating within a given specimen, since the calibration of fission track dating would almost force such agreement.

However, there are still several interesting contradictions, despite calibration. For example, Naeser and Fleischer (Harvard University) showed that, depending upon the calibration method chosen, the calculated ages of a given rock (from Cerro de Mercado, Mexico in this case) could differ from each other by a factor of “sixty or more” – “which give geologically unreasonable ages” (Link).

“In addition, published data concerning the length of fission tracks and the annealing of minerals imply that the basic assumptions used in an alternative procedure, the length reduction-correction method, are also invalid for many crystal types and must be approached with caution unless individually justified for a particular mineral” (Link).

Now that’s pretty significant – being off by a factor of sixty or more? No wonder the authors recommend only going with results that do not provide “geologically unreasonable ages”.

Tektites:

Another example of this sort of error with fission track dating comes in the form of glass globs known as “tektites”. Tektites are thought to be produced when a meteor impacts the Earth. The massive impact creates a lot of heat, which melts the rocks of the Earth and sends them hurtling through the atmosphere at incredible speed. As these fragments travel through the atmosphere, they become super-heated and malleable as they melt to a red-hot glow, and are formed and shaped as they fly along. It is thought that the impact can be dated by applying various radiometric dating methods to the tektites. For example, Australian tektites (known as australites) show K-Ar and fission track ages clustering around 700,000 years. The problem is that their stratigraphic ages show a far different picture. Edmund Gill, of the National Museum of Victoria, Melbourne, while working the Port Campbell area of western Victoria, uncovered 14 australite samples in situ above the hardpan soil zone. This zone had been previously dated by the radiocarbon method at seven locales, the oldest dating at only 7,300 radiocarbon years ago (Gill 1965). Charcoal from the same level as that containing specimen 9 yielded a radiocarbon age of 5,700 years. The possibility of transport from an older source area was investigated and ruled out, since the “Port Campbell australites include the best preserved tektites in the world … any movement of the australites that has occurred … has been gentle and has not covered a great distance” (Gill 1965). Aboriginal implements have been discovered in association with the australites. A fission-track age of 800,000 years and a K-Ar age of 610,000 years for these same australites unavoidably clash with the obvious stratigraphic and archaeological interpretation of just a few thousand years.

“Hence, geological evidence from the Australian mainland is at variance, both as to infall frequency and age, with K-Ar and fission-track dating” (Lovering et al. 1972). Commenting on the above findings by Lovering and his associates, the editors of the book, Tektites, state that, “in this paper they have built an incontrovertible case for the geologically young age of australite arrival on earth” (Barnes and Barnes 1973, p. 214).

This is problematic. The argument that various radiometric dating methods agree with each other isn’t necessarily true – especially when organic remains that can be Carbon-14 dated are available. Here we have the K-Ar and fission track dating methods agreeing with each other, but disagreeing dramatically with the radiocarbon and historical dating methods (which is not an uncommon situation). These findings suggest that, at least as far as tektites are concerned, the assumption of a complete loss of 40Ar (and therefore the resetting of the radiometric clock) may not be valid (Clark et al. 1966). It has also been shown that different parts of the same tektite have significantly different K-Ar ages (McDougall and Lovering, 1969). This finding suggests a real disconnect when it comes to the reliability of at least two of the most commonly used radiometric dating techniques (Link).

In short, it seems like fission track dating is tenuous at best – even as a relative dating technique that must first be calibrated against other dating techniques.

Carbon 14 Dating:

Introduction:

All living things on this planet are built upon a carbon backbone, so to speak. Carbon is one of the key elements that makes life, as we know it, possible. So, during the lifetime of any living thing, carbon is taken in and used as part of the building blocks of the body of the organism. Since the various isotopes of carbon are chemically indistinguishable, both carbon-12 (stable) and carbon-14 (radioactive, produced when cosmic rays turn nitrogen-14 into carbon-14) will be taken up in proportion to the existing ratio of these isotopes within the environment at the time. And, this ratio will be maintained within the tissues of the organism for its entire life. However, when the organism dies, the carbon contained within its tissues no longer interacts with the carbon within the surrounding environment. So, the ratio of 12C to 14C will increase over time because of the radioactive decay of 14C back into 14N with a relatively short half-life of 5730 years.

So, given the ratio of atmospheric 14C to 12C, one can determine the time of death of a given organism by measuring the amount of 14C remaining within the tissues of the organism and comparing that amount to the original amount (i.e., the amount that was present within the atmosphere).
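
As a rough sketch of that calculation, the function below turns the fraction of the original 14C still present into an elapsed time, using the 5730-year half-life quoted above. It assumes the original amount is known exactly and that nothing but decay has changed the 14C content since death. The function name is just illustrative.

```python
import math

HALF_LIFE_C14 = 5730.0  # years, the half-life quoted in the text


def radiocarbon_age(fraction_remaining, half_life=HALF_LIFE_C14):
    """Elapsed time (years) implied by the fraction of original 14C remaining."""
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in the range (0, 1]")
    # N / N0 = (1/2) ** (t / half_life), solved for t
    return -half_life / math.log(2.0) * math.log(fraction_remaining)


for fraction in (0.5, 0.25, 0.1):
    print(f"{fraction:.2f} of original 14C -> {radiocarbon_age(fraction):,.0f} years")
```

One half-life leaves half of the original 14C, two half-lives leave a quarter, and so on.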

Calibration:

It all seems rather straightforward. However, there are a few caveats. For example, the ratio of atmospheric 14C to 12C doesn’t stay the same over time, but changes. Also, there are regional variations in the ratio that must be considered. This is why carbon-14 dating isn’t an entirely independent dating method, but requires calibration against other dating methods – like various historically-derived events and tree-ring dating, for instance (Link). Of course, tree-ring dating is in turn calibrated by other dating techniques, primarily carbon-14 dating – which is just a bit circular. Also, attempts to use amino acid racemization (AAR) rates to help calibrate radiocarbon dating have failed. AAR dating methods have themselves turned out to require calibration by radiocarbon dating (Link).
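
To see why the starting ratio matters, here is a small sketch of how far an uncalibrated age drifts when the real atmospheric 14C/12C ratio at the time of death differed from the value assumed in the calculation. The function name and the example ratios are hypothetical. Only the 5730-year half-life comes from the text.

```python
import math

MEAN_LIFE_C14 = 5730.0 / math.log(2.0)  # mean life in years, from the 5730-year half-life


def apparent_age_offset(true_over_assumed_initial):
    """Years added to the computed age when the real starting 14C/12C ratio
    was this fraction of the ratio assumed in the calculation."""
    if true_over_assumed_initial <= 0.0:
        raise ValueError("ratio must be positive")
    return -MEAN_LIFE_C14 * math.log(true_over_assumed_initial)


# Hypothetical cases: atmosphere 10% poorer, equal to, and 10% richer in 14C than assumed.
for ratio in (0.9, 1.0, 1.1):
    print(f"true/assumed = {ratio:.1f} -> offset of {apparent_age_offset(ratio):+.0f} years")
```

A sample that started with less 14C than assumed looks older than it is, and one that started with more looks younger. The offset does not depend on the true age of the sample.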

Dinosaur Soft Tissue Preservation: